On November 30, 2022, OpenAI, backed by Microsoft, launched ChatGPT and ushered in a technological shift that had long been anticipated. The launch marked a significant milestone for Artificial Intelligence (AI), one that many believed was on the horizon but had yet to be realized. For years, tech giants such as Google and Yahoo had been working with big data to build AI systems capable of transforming the world. The arrival of ChatGPT, however, signaled a profound leap forward, making AI more accessible and practical than ever before.

ChatGPT made tasks such as data analysis, code generation, website development, and content creation significantly faster and more efficient. This advancement sparked innovation across the industry, leading to new AI tools such as Google’s Gemini. As with any powerful technology, however, AI also presents challenges. While it enhances productivity, it can be misused for unethical purposes, such as creating fake documents, deepfakes, and manipulated images, which raises concerns about its potential for generating harmful content.

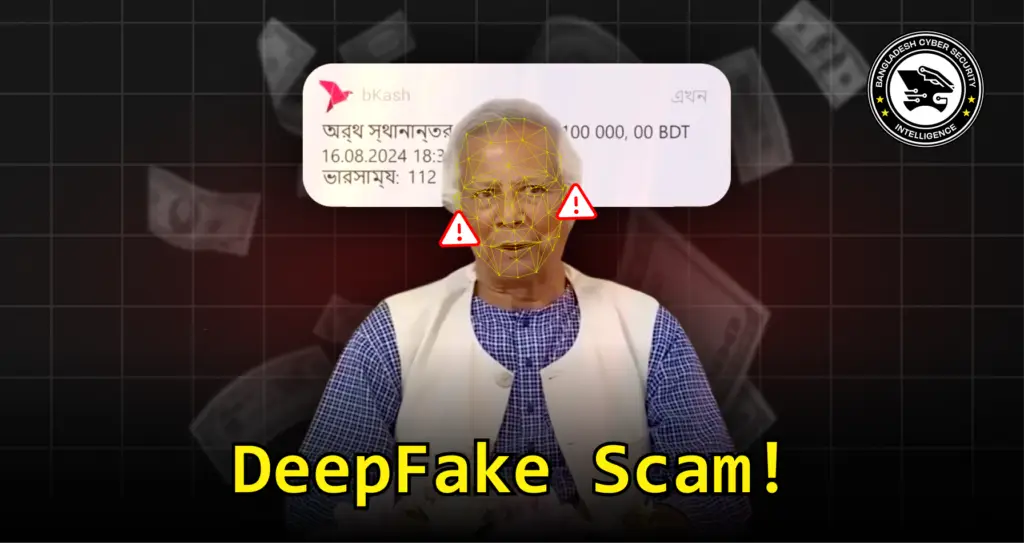

The misuse of AI has grown beyond images into video and audio manipulation. Deepfake technology can produce convincing yet entirely fabricated videos of real people, often with scripted dialogue synced to the subject's lips. It has been weaponized to produce fraudulent content, including fake videos of well-known personalities such as MrBeast and Warren Buffett. Recently, a deepfake video surfaced in Bangladesh falsely claiming that Dr. Muhammad Yunus, the Chief Adviser of Bangladesh, had legalized casinos and developed a casino app with a “100% winning rate.” A closer look at the video reveals telltale signs of AI manipulation, particularly the synthetic voice and unnatural accent.

These incidents highlight the darker side of AI’s rapid advancement. While generative AI holds immense potential for positive impact, its capabilities can easily be turned to malicious purposes. The proliferation of deepfake videos, fake news, and fraudulent schemes illustrates the urgent need for stricter regulation, ethical guidelines, and public awareness. As AI technology continues to evolve, so too must our understanding of both its benefits and its dangers. Balancing innovation with responsibility is critical to ensuring that the future of AI remains beneficial and trustworthy for all.